How do I learn Spark beyond the basics?

Set aside a few hours with my course "Efficient Data Processing in Spark," and you'll be on your way to mastering Spark & building industry-standard data pipelines.

You don't know Spark beyond the bare basics

You know that being able to navigate the complexities of Apache Spark concepts can significantly improve your career trajectory and help you land a high-paying, six-figure data engineering job. But you don't know what that level of expertise would look like or how to get there.

You might know the basic syntax and even know how to do some exploratory work with Spark. However, your expertise is not at a level where you can handle complex data processing use cases and make your pipelines run faster and cheaper.

You want to become a Spark expert, but you don't have the opportunity to use Spark in a real-life project to build expertise. You wonder how people gain expertise in Spark. Does the company provide time for them to build expertise, or do they spend their off hours learning Spark?

"There is a steep learning curve to making the most of Spark"

You have tried books, blog posts, documentation, etc., to learn about Spark concepts. Still, they only cover the basic API or how to use a particular Spark feature; they do not cover the intuition behind a feature or when and why to use it. There are too many concepts to master:

* metadata

* partitioning

* clustering

* bucketing

* transformation types

* join strategies

* lazy evaluation

* & so much more!

In addition to all the concepts, you also need to know how these concepts interact and influence each other. Trying to understand all of Spark's components feels like fighting an uphill battle with no end in sight; there is always something else to learn or some new error that you have to Google.

Distributed data processing systems are inherently complex. Add the fact that Spark provides multiple ways of customizing how data is processed and stored, and it becomes tricky to know what the right approach is for your use case and when to stop messing with the available options.

You know that having a good grasp of distributed data processing system concepts can help you grow as an engineer and build efficient pipelines that save money (and, in turn, propel your career). Still, it is truly overwhelming to think about how to build a big-picture understanding of these concepts!

You keep waiting for the right project to land on your lap and "somehow" make you a Spark expert!

It's straightforward to use the Spark API. Still, only a Spark expert will know how to make the application run more efficiently (in both cost and performance). You realize it's not straightforward to learn about distributed data processing optimization techniques without encountering a scenario that requires them and having the time to try them.

Even with the right opportunity, running into a data processing issue and trying to optimize it involves:

1. A lot of time.

2. Hundreds of hours of YouTube videos.

3. Reading the documentation/source code.

4. Experimenting to find the right approach.

It's impossible to experiment and try various optimization techniques when you are on a tight deadline to deliver!

"Recently joined a company using Spark. How do I upskill quickly?"

Say you landed a great job where you will be building pipelines with Spark. Without knowing how distributed data processing systems work in detail, you will:

1. Burn cash running bad queries!

2. Build Spark applications that fail or hang!

3. Deliver data late to stakeholders, leading to erosion of trust!

Taking a spray-and-pray approach to Spark optimization can waste vast amounts of time.

You (or the on-call engineer) don't want to wake up at odd hours, pulling out your hair to figure out what went wrong with your Spark pipelines!

Real jobs require more than Spark knowledge. You must know how to build data pipelines with Spark

Things like how data flows through your pipeline, how you model your data, how you organize your code, and how you deliver usable data to stakeholders are critical in an actual data project.

Without a good grasp of how to build data pipelines with Spark,

* your pipeline will be challenging to maintain

* your feature delivery speed will slow to a halt

* you will spend most of your time dealing with your pipeline hanging or failing

* the quality of your data will be a constant source of issues

* and you will burn out

Understanding distributed data processing and storage concepts and how to use them to your advantage can propel your data career to high-paying jobs.

Still, you feel stuck: you have no opportunities to practice them at work, or you do not know where to learn them in depth without spending years of your life!

Distributed data processing concepts are, at their core, intuitive

Imagine being able to visualize how distributed systems read and process data; you will be able to build a scalable, resilient data processing application quickly.

If you know the inner workings of distributed data processing systems, all the optimization techniques and new techniques will become immediately apparent. Imagine ramping up on any distributed data processing system (Spark, Snowflake, BigQuery) and its optimization techniques (sorting, partition pruning, encoding, etc.) in hours instead of days!

When you read any data processing code, the performance bottlenecks and potential issues will jump out at you.

Know the exact steps to squeeze every bit of performance in your Spark application

You know that optimizing a Spark application at its core is about ensuring that the least amount of data is processed and that the cluster is used to its full capabilities!

You will begin to see the optimization techniques (partitioning, clustering, sorting, data shuffling, task parallelism, etc.) as knobs, each with its tradeoffs, and how turning one can affect other parts of the system. As a Spark expert, you will know which ones to turn and how much.

All optimization techniques are essentially ways to do one of the following:

1. Reduce the amount of data to process

2. Reduce the amount of data to undergo high-cost processing

3. Push system usage to the max

You will quickly determine the right approach(es) for your use case.

What makes you money isn't understanding Spark or some other framework but knowing how data travels from source to destination and how to make it run as cheaply as possible.

Uplevel your colleagues with your knowledge of data engineering best practices

Your colleagues will be grateful to you for helping them uplevel their knowledge of building scalable, easy-to-maintain pipelines!

Your resilient and well-designed data pipelines will improve your team's morale and work-life balance. Having the foresight to know what may break and how to make your pipeline resilient will put you on a career fast track.

Your pipeline-building skills will help your team deliver features ahead of time and earn you the admiration of your manager.

Mastering Spark internals and distributed data processing optimization techniques requires the right opportunities/mentor and may take years, but it doesn't have to!

By breaking down distributed data processing systems into their fundamental concepts, you can master any data system quickly.

Ramp up quickly on distributed data processing fundamental concepts

Learn the foundational principles of distributed data processing using Apache Spark in my hands-on "Efficient Data Processing in Spark" course.

You will learn the Spark API and how Spark works internally.

You'll also learn distributed data processing optimization techniques, their effectiveness, and when to use them (with examples and code running locally).

You will learn:

* how Spark plans to process data by understanding how query plans are built

* how Spark reads data into memory, processes it, and writes it out

* to inspect Spark's job performance and make changes to ensure Spark's full power is used

Master Spark internals, monitor resource usage, and build a mental model to quickly hypothesize how Apache Spark will process data and verify it with the query plan and the Spark UI.

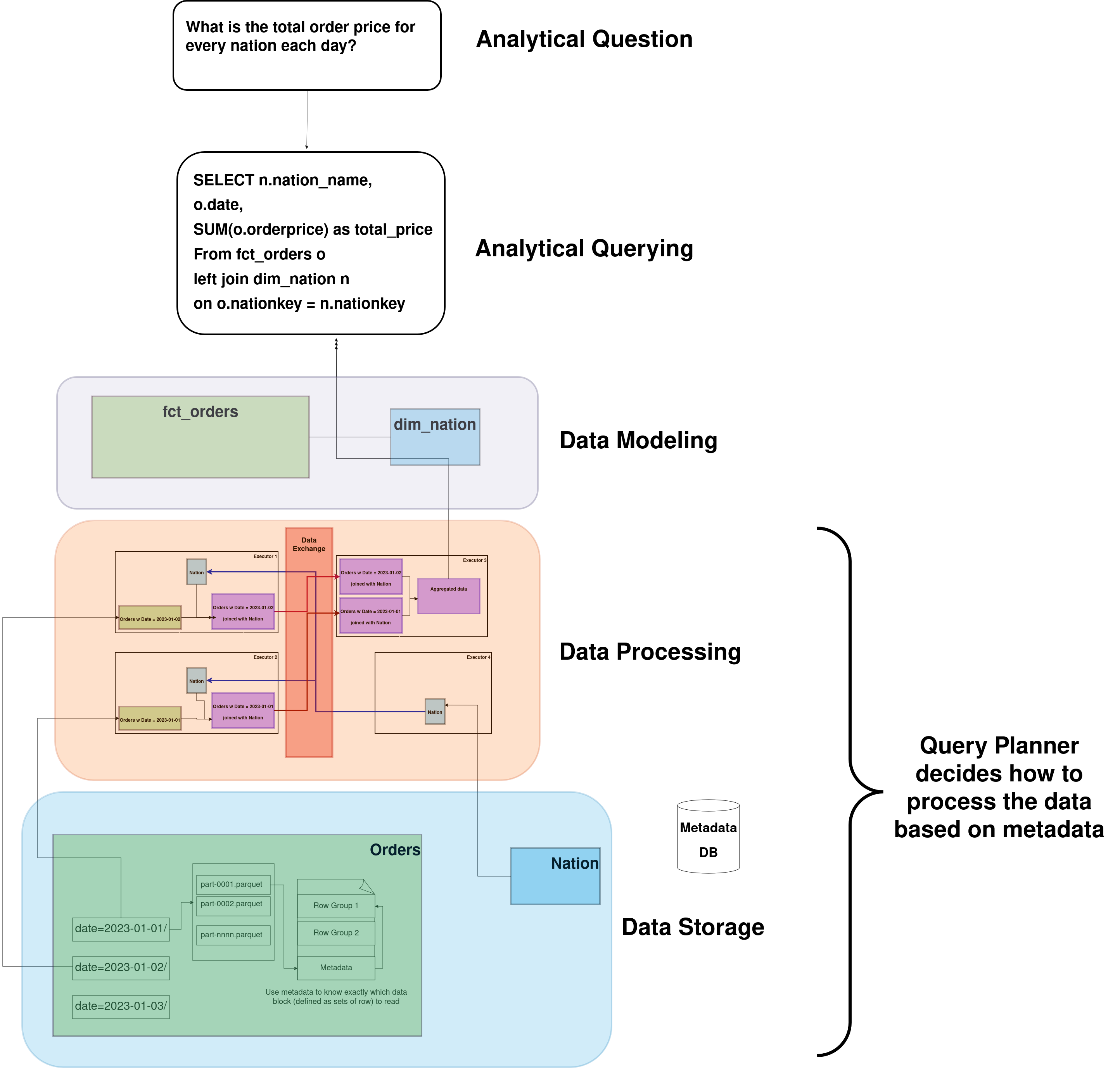

You will inspect and see how Spark develops a plan to process data based on your code and data. You will also see how Spark uses metadata to choose the optimal plan to process data. You will do all this with both the Spark SQL and PySpark APIs.

You will learn how distributed data processing concepts (zoom in on the image below) interact.

Learn about distributed data processing optimization techniques and when (& how) to use them

The course will build your intuition about how data storage and processing techniques work together and how you can tune them to maximize performance.

You can break down optimization techniques into storage and processing systems and identify their tradeoffs.

By the end of this course, you will master the critical optimization techniques that apply to all distributed data processing systems (Spark, Snowflake, Trino, etc.) and be able to choose the appropriate design for your data pipeline.

Build an end-to-end industry-standard data pipeline with Spark (included only with the complete package)

You will build an end-to-end capstone project following industry-standard best practices for data transformation, modeling, data quality checks, and testing with PySpark, Delta Lake, Great Expectations, and pytest.

You will start with stakeholder problems (not just requirements), learn how to "know your data," identify ways to model data, and design how the data will flow through your system.

In this project, you will also learn about DE best practices, such as separation of concerns, object-oriented programming, and code organization for reusability.

You will build your own ETL pipelining system using Spark!

Understand the fundamental distributed data processing concepts with my "Efficient Data Processing in Spark" course. Start with Spark basics and learn about distributed data processing systems' optimization techniques and their tradeoffs.

Build the skill to visualize in intricate detail how data is processed in a distributed data processing system, starting with a block of data, how and when Spark reads it into memory, ways of encoding data, steps to process data, and finally, writing it out to a storage system.

Set aside a few hours with my course "Efficient Data Processing in Spark", and you'll be on your way to mastering Spark & building industry-standard data pipelines!

Learn Spark beyond the basics, today!

Efficient Data Processing in Spark - E-book

What's included:

E-book

Code for the course

Efficient Data Processing in Spark - E-book & Video

What's included:

E-book + Code

Videos (hosted online) for each chapter, providing extra context in an easy-to-digest format

Efficient Data Processing in Spark - The complete package

What's included:

E-book + Code + Videos

Capstone project (additional E-book section and video content)

Discussion (via GitHub) for any data question

Certificate of completion

Any future updates

Course Contents

Efficient Data Processing in Spark Book

In this section, you can download the "Efficient Data Processing in Spark" book to use along with the upcoming video lessons.

Preface

This book is aimed at people looking to learn the core concepts of distributed data processing systems. We will use Apache Spark and learn about its API, Spark SQL, its internals, how data is processed, techniques to store data for efficient usage, and how to model data for accuracy and ease of use. We will also go over advanced data processing patterns and templates for common SQL problems.

Lab Setup

Apache Spark Basics

This section will cover distributed systems and how they work. We will delve into the Spark architecture and how executors and drivers work together to process data. We will go over how schemas and tables are used for data organization. This section also covers Spark Data Processing 101 in depth, covering all the major functionalities you will need to write data pipelines with Spark. Finally, we will end with a chapter on monitoring Spark cluster resource usage with the Spark UI and metrics API.

[Query Plan] Understand how Spark processes data with the query planner

In this section, we will learn what a query plan is and how to use it. We will also go over how part of your Spark code will be declarative (and what declarative programming is). We will also review the Spark components that create the query plan and how the Spark engine uses the data about our data (aka metadata) to create an efficient data processing plan.
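
As a rough illustration of how metadata can shape a plan, the sketch below uses plain Python (no Spark required) to mimic an engine skipping files based on per-file min/max statistics. The `FileStats` class, the `files_to_scan` helper, the file names, and the `order_date` column are all hypothetical and exist only for this example; Spark's actual planner is far more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file metadata, similar in spirit to column min/max statistics."""
    path: str
    min_order_date: str
    max_order_date: str
    row_count: int


def files_to_scan(files, start, end):
    """Keep only files whose [min, max] date range overlaps the filter range [start, end]."""
    return [f for f in files if f.max_order_date >= start and f.min_order_date <= end]


# ISO-formatted dates compare correctly as plain strings.
files = [
    FileStats("part-000.parquet", "2024-01-01", "2024-01-31", 1_000),
    FileStats("part-001.parquet", "2024-02-01", "2024-02-29", 1_200),
    FileStats("part-002.parquet", "2024-03-01", "2024-03-31", 900),
]

# Filter: order_date BETWEEN '2024-02-10' AND '2024-02-20'
selected = files_to_scan(files, "2024-02-10", "2024-02-20")
rows_skipped = sum(f.row_count for f in files) - sum(f.row_count for f in selected)
print([f.path for f in selected], "rows skipped:", rows_skipped)
```

The point of the toy model is that the decision is made from metadata alone: no data file is opened to rule it out.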

[Data Processing] Reducing data movement between Spark executors is critical to optimization

In this section, we will learn about lazy evaluation and how Spark exploits it to build efficient query plans. We will review narrow and wide transformation types and how they impact performance. We will learn how Spark splits our code into jobs, stages, and tasks and how they process data in parallel (& when they don’t). We will end this section with the different join strategies, i.e., how Spark joins data internally and the tradeoffs between different ways of performing a join.
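
To make the data movement tradeoff concrete, here is a minimal plain-Python sketch (not the Spark API) that compares two join strategies by counting how many rows would have to travel between executors. Everything in it is an assumption made for illustration: the three-executor "cluster", the modulo partitioner, the `orders` and customer data, and the simplification that a broadcast costs one full copy of the small table per executor.

```python
from collections import defaultdict

NUM_EXECUTORS = 3  # hypothetical cluster size


def local_join(big_rows, small_rows):
    """Hash join on one executor: build a lookup from the small side, probe with the big side."""
    lookup = defaultdict(list)
    for key, name in small_rows:
        lookup[key].append(name)
    return [(key, order, name) for key, order in big_rows for name in lookup.get(key, [])]


def shuffle_join(big_parts, small_parts):
    """Repartition BOTH tables by key so matching rows meet on the same executor."""
    moved = 0
    big_out = [[] for _ in range(NUM_EXECUTORS)]
    small_out = [[] for _ in range(NUM_EXECUTORS)]
    for parts, out in ((big_parts, big_out), (small_parts, small_out)):
        for source, rows in enumerate(parts):
            for row in rows:
                target = row[0] % NUM_EXECUTORS  # toy hash partitioner on the join key
                moved += target != source        # count rows sent to another executor
                out[target].append(row)
    joined = [r for e in range(NUM_EXECUTORS) for r in local_join(big_out[e], small_out[e])]
    return sorted(joined), moved


def broadcast_join(big_parts, small_parts):
    """Copy the WHOLE small table to every executor; big-table rows never move."""
    small_rows = [row for rows in small_parts for row in rows]
    moved = len(small_rows) * NUM_EXECUTORS  # simplification: one full copy per executor
    joined = [r for rows in big_parts for r in local_join(rows, small_rows)]
    return sorted(joined), moved


# 300 orders spread round-robin over the executors; 4 customers sitting on executor 0.
orders = [(i % 4, f"order-{i}") for i in range(300)]
big_parts = [orders[p::NUM_EXECUTORS] for p in range(NUM_EXECUTORS)]
small_parts = [[(0, "Ann"), (1, "Bob"), (2, "Cy"), (3, "Di")], [], []]

shuffle_rows, shuffle_moved = shuffle_join(big_parts, small_parts)
broadcast_rows, broadcast_moved = broadcast_join(big_parts, small_parts)
print(f"shuffle join moved {shuffle_moved} rows, broadcast join moved {broadcast_moved}")
```

Both strategies return the same joined rows; they differ only in how much data has to move. With a much larger "small" table, the broadcast copy would become the expensive part, which is the kind of tradeoff this section is about.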

[Data Storage] Pre-process data for optimal performance

How we store data impacts every interaction with that data, so data storage is critical. This section will review the standard ways of storing large data sets, where they are stored, and their caveats. We will learn about row and columnar formats and how Parquet (columnar encoding + metadata) works. We will review techniques to optimize data reads, such as partitioning, bucketing, and sorting, and their tradeoffs. We will end this chapter by learning about the pros and cons of table formats (with Delta).
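
The difference between row and columnar layouts can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not how Parquet is implemented: the `rows` data, the two in-memory "stores", and the `values_read` counter are all made up to show why reading a single column is cheaper when each column is stored together.

```python
rows = [
    {"order_id": 1, "customer": "ann", "amount": 10.0, "country": "US"},
    {"order_id": 2, "customer": "bob", "amount": 20.5, "country": "DE"},
    {"order_id": 3, "customer": "cy", "amount": 5.0, "country": "US"},
]

# Row-oriented layout: all fields of a record are stored next to each other.
row_store = [tuple(r.values()) for r in rows]

# Column-oriented layout: all values of a column are stored next to each other.
column_store = {name: [r[name] for r in rows] for name in rows[0]}


def total_amount_row_store(store):
    """Sum `amount` from the row layout; every field of every record gets read."""
    total, values_read = 0.0, 0
    for record in store:
        values_read += len(record)  # the whole record is read to reach one field
        total += record[2]          # index 2 is the `amount` field
    return total, values_read


def total_amount_column_store(store):
    """Sum `amount` from the columnar layout; only that one column gets read."""
    column = store["amount"]
    return sum(column), len(column)


row_total, row_values_read = total_amount_row_store(row_store)
col_total, col_values_read = total_amount_column_store(column_store)
print(row_total, row_values_read, col_total, col_values_read)
```

The totals match, but the columnar version touches a quarter of the values here, and the gap grows with the number of columns in the table.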

[Data Modeling] Organize your data for analytical querying

This section will cover what data warehousing is and how to use it for real-life business scenarios. We will learn steps to understand the data you are working with and how to model it for analytical data loads. We will end by learning the one big table (OBT) technique.

[Templates] Common patterns in data processing

In this section, we will discuss how to split complex logic for readability (with CTEs), how to use data from multiple rows without a GROUP BY (using window functions), and functionality such as de-duping, pivoting, and period-over-period calculations, which are prevalent in real projects.
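
Here is a small sketch of what such a template can look like: CTEs to split the logic into steps, `ROW_NUMBER()` to de-duplicate, and `LAG()` for a month-over-month change. To keep it runnable without a cluster, it uses Python's built-in `sqlite3` module as a stand-in for Spark SQL (it needs a SQLite build with window function support, version 3.25 or later). The `orders` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER, month TEXT, amount REAL, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [
        (1, "2024-01", 100.0, "2024-01-05"),
        (1, "2024-01", 120.0, "2024-01-09"),  # later version of order 1
        (2, "2024-02", 80.0, "2024-02-03"),
        (3, "2024-02", 70.0, "2024-02-11"),
        (4, "2024-03", 90.0, "2024-03-02"),
    ],
)

query = """
WITH ranked AS (          -- step 1: rank the versions of each order, newest first
    SELECT *,
           ROW_NUMBER() OVER (PARTITION BY order_id ORDER BY updated_at DESC) AS rn
    FROM orders
),
deduped AS (              -- step 2: keep only the newest version of each order
    SELECT order_id, month, amount FROM ranked WHERE rn = 1
),
monthly AS (              -- step 3: aggregate to one row per month
    SELECT month, SUM(amount) AS revenue FROM deduped GROUP BY month
)
SELECT month,
       revenue,
       revenue - LAG(revenue) OVER (ORDER BY month) AS change_vs_prev_month
FROM monthly
ORDER BY month
"""

monthly_revenue = conn.execute(query).fetchall()
for row in monthly_revenue:
    print(row)
```

Each CTE does one job, which keeps the final `SELECT` short and makes every intermediate step easy to inspect on its own.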

Capstone Project [Complete package only]

In this project, we will start with requirement gathering, understanding expected outcomes, defining data quality, designing the data pipeline, deciding on code organization, and adding code testing. We will build a complete end-to-end data pipeline following industry-standard data modeling techniques, easy-to-maintain code organization & evolvable data quality checks.

Data Q&A [Complete package only]

Testimonials

Prior to taking the course, I did not have an in-depth understanding of how spark works under the hood- I had only learned some of the syntax.

But now, I have a better understanding of things like join strategies, how storage systems like parquet work, the pros and cons of partitioning and clustering etc.

Stephen

I will surely recommend the book to friends that work in data. The reason been that the book thus far makes it easy to understand concepts and it explains what happens behind the scene when you run a query. This helps in writing optimized queries which in turn helps to save cost.

Oladayo

This is really really really helpful! The kind of stuff you pay college to teach but is never done. Thank you very much!

r/dataengineering

About me

With a proven track record spanning several years in data engineering and software development, I am a results-driven professional dedicated to optimizing data infrastructure and driving business growth.

With about a decade of experience, I have worked for large companies as well as small and medium-sized companies and have seen the struggles and challenges faced by both.

I blog at https://www.startdataengineering.com/

Frequently asked questions

Is this book beginner-friendly?

Yes. Please make sure to do the course in order, as each chapter builds on the previous ones.

What is the refund policy?

If you are unsatisfied, we offer a 30-day money-back guarantee. Please reach out to help@startdataengineering.com.

Does the book contain code examples?

Yes, the book contains code examples which you will use during the course. You will also have access to the git repo containing all the code and examples.

GitHub Repo: https://github.com/josephmachado/efficient_data_processing_spark

What is not covered in this book?

We do not cover streaming, Scala, RDDs, or managing a Spark cluster on the cloud.

I have another question

Please send your questions to help@startdataengineering.com.

How long is the content?

The book is 220 pages long. The total length of the videos is about 2 hours.

Do I have lifetime access to the content?

Yes, this is a complete-at-your-own-pace course, so you can buy it now and access it whenever you'd like.

Can I upgrade to a higher tier package from a lower tier without repaying the full price?

Yes, just use the chat button at the bottom right or send an email to joseph.machado@startdataengineering.com to let me know if you'd like to upgrade. I will send you an option to pay the difference for an upgrade.